In the keynote in which the iPhone 11 Pro was presented, Phil Schiller gave a brief look at a feature that Apple calls "Deep Fusion". He described it as “computational photography mad science”. While watching the keynote, it wasn't immediately clear to me what the feature does, because good low-light photos had already been shown in connection with the iPhone 11, so I assume there is something more to it.


What exactly does Deep Fusion do?

This is exactly the question I asked myself, so I watched the corresponding passage in the keynote again. For readers who would like to watch it too: it starts at time stamp 1:21:55.

In detail, the following happens: the iPhone 11 Pro (only the version with the three lenses, not the regular iPhone 11 without Pro!) takes eight pictures before the shutter button is even pressed. Four of these are short exposures and four are slightly longer exposures. The ninth photo, a long exposure, is captured the moment you press the shutter button.

Within a second of pressing the shutter button, the iPhone 11 Pro's Neural Engine analyzes the individual images and combines them pixel by pixel into a single image, drawing only on the best of them.
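Apple has not published how this fusion actually works, so the following Python sketch is only a toy illustration of what combining frames "pixel by pixel" can mean. It assumes several already aligned grayscale frames stored as nested lists. The function name `fuse_frames` and the median-based weighting are my own assumptions and not Apple's method.

```python
from statistics import median


def fuse_frames(frames):
    """Fuse equally sized grayscale frames pixel by pixel.

    Hypothetical stand-in for Deep Fusion: at every pixel position, samples
    that lie far from the median across all frames (likely noise) receive
    a low weight in the weighted average.
    """
    height, width = len(frames[0]), len(frames[0][0])
    fused = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            samples = [frame[y][x] for frame in frames]
            mid = median(samples)
            # Weight shrinks with distance from the median;
            # the 1.0 in the denominator avoids division by zero.
            weights = [1.0 / (1.0 + abs(s - mid)) for s in samples]
            total = sum(w * s for w, s in zip(weights, samples))
            fused[y][x] = total / sum(weights)
    return fused


# Nine tiny 1x2 frames; one of them has a noisy first pixel.
frames = [[[100.0, 50.0]] for _ in range(8)] + [[[200.0, 50.0]]]
result = fuse_frames(frames)
print(result)
```

In this example the outlier barely shifts the fused pixel, whereas a plain average over all nine frames would pull it noticeably upwards. A real pipeline would also have to align the frames and handle color, which this sketch leaves out.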

The end result is a photograph with 24 megapixels, which is characterized by a particularly high level of detail and low image noise.

According to Phil Schiller, this is the first time that the Neural Engine has been used to output photos and the term "computational photography mad science" came up in this context.

iPhone 11 Pro Deep Fusion camera: 24 MP instead of 12 MP resolution

Anyone who has looked at the technical data of the iPhone 11 Pro will surely have noticed that the three built-in cameras only have a sensor resolution of 12 megapixels. This was already the case with the iPhone XS, which is why the highest resolution of iPhone photos so far has been 12 MP.

With the Deep Fusion camera feature, however, the iPhone 11 Pro now outputs photos with 24 megapixels. Technically, that is only possible by combining several photos into one larger image, with the extra detail coming from the minimal camera movement between the individual shots. There's a photo app called "Hydra" that takes a similar approach and produces 32 MP photos. You can find sample photos on the developer's page under “Super Resolution (Zoom / Hi-Res)”.
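To make the idea of getting more pixels out of several slightly shifted shots more tangible, here is a deliberately simplified Python sketch in one dimension. It assumes two captures of the same image row that are offset by exactly half a pixel and simply interleaves them. The function name `interleave_shifted` and the perfectly known offset are assumptions for illustration; neither Apple nor the Hydra developers have confirmed that they work this way, and real software would first have to estimate the shift between the frames.

```python
def interleave_shifted(frame_a, frame_b):
    """Merge two 1-D captures taken half a pixel apart.

    frame_a holds the samples at even positions of a finer grid and
    frame_b the samples at odd positions, so interleaving them yields
    a row with twice as many samples.
    """
    if len(frame_a) != len(frame_b):
        raise ValueError("both captures must have the same length")
    merged = []
    for a, b in zip(frame_a, frame_b):
        merged.extend([a, b])
    return merged


# A finely sampled "scene" and two coarse captures of it.
scene = [10, 12, 15, 19, 24, 30, 37, 45]
capture_a = scene[0::2]  # sensor positioned on the even grid points
capture_b = scene[1::2]  # sensor shifted by half a (coarse) pixel
print(interleave_shifted(capture_a, capture_b))
```

Each capture on its own only has four samples, but together they cover all eight positions of the finer grid. The same principle applied in two dimensions is one way in which several 12 MP frames could be combined into a larger photo.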

Even if Hydra is certainly not bad, the iPhone 11 Pro should be considerably more convenient to use and deliver better results.

Google Night Sight alternative from Apple

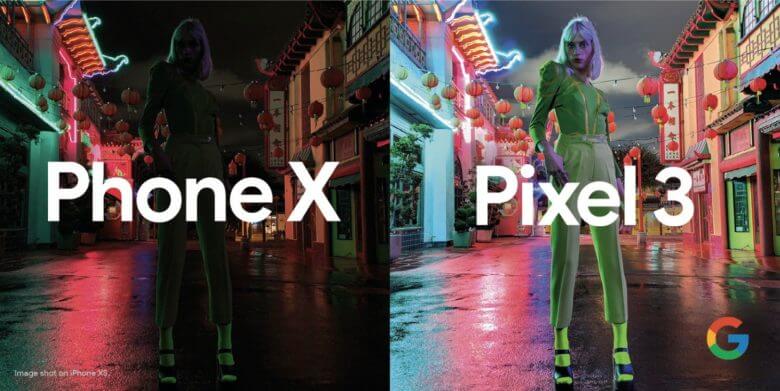

You have to give Google credit: its Night Sight shots have put all low-light photos from previous iPhones in the shade. I originally wrote here that, as far as I had read, the Google Pixel smartphones send the pictures to the Google cloud for processing and achieve the impressive quality of Night Sight photos that way. That statement is not correct. I read up on it again and learned that all processing is done on the Pixel itself and no data is uploaded to the cloud.

With the iPhone's Deep Fusion mode, no photos leave the device, and certainly not for third-party servers, unless you have explicitly chosen to store them in the iCloud photo library. Apple is known for trying to solve this kind of thing locally on the device. This may be the reason why Deep Fusion is only arriving now that there is a new iPhone with a faster processor.

In any case, this feature should allow Apple to catch up with Google in terms of low-light shots. The only question is whether Google already has something up its sleeve to raise the bar again with the next Pixel phone.

Google Night Sight vs Apple Night Mode

All iPhone 11 models come with a feature called Night Mode, while Deep Fusion is apparently reserved for the Pro models. Technically, Night Mode is closer to Google's Night Sight, since both rely on longer exposure times and then merge the shots computationally, similar to HDR mode. With Deep Fusion, on the other hand, there seems to be a lot more “technical magic” going on in the background.

What is interesting here is how the iPhone's Night Mode differs from the Night Sight mode of the Pixel phones. From what I have seen in test reports so far, Apple's Night Mode looks much more natural, as the photos still clearly show that the picture was taken in the dark. In the Night Sight mode of the Pixel phones, however, the photos look more like they were taken during the day.

From a photographer's point of view, I like Apple's approach much better, because I like it when the nighttime mood is retained in the photos while the colors and details are still significantly better than in low-light shots from the iPhone XR, XS and XS Max.

When will Deep Fusion come to the iPhone?

I'm excited to see how Deep Fusion performs in practice. According to Phil Schiller, it is not only suitable for taking pictures in poor lighting conditions, but can also achieve impressive results in better light.

However, Deep Fusion is not yet available when the iPhone 11 Pro models ship. Apple will only deliver it to the Pro models with a software update this fall.


Jens has been running the blog since 2012. He acts as Sir Apfelot for his readers and helps them with technical problems. In his spare time he rides electric unicycles, takes photos (preferably with the iPhone, of course), climbs around in the Hessian mountains or hikes with the family. His articles deal with Apple products, news from the world of drones or solutions to current bugs.

"The technical background is that - as far as I've read - the Google Pixel smartphones can edit the pictures taken in the Google cloud and thus achieve the impressive quality of the photos in Night Sight mode." - What nonsense, that would mean that Night Sight would not work if you were offline. Also, no one has to upload their photo anywhere. Source for such claims please.

Hello Mario! You are absolutely right. I just read again on English blogs about Night Sight and it doesn't say anywhere that the photos are edited in the cloud. So it all happens on the Google Pixel. I am correcting the passage at the top of the article. Thank you for your hint. I had heard this statement on a podcast, but they were also apparently misinformed.